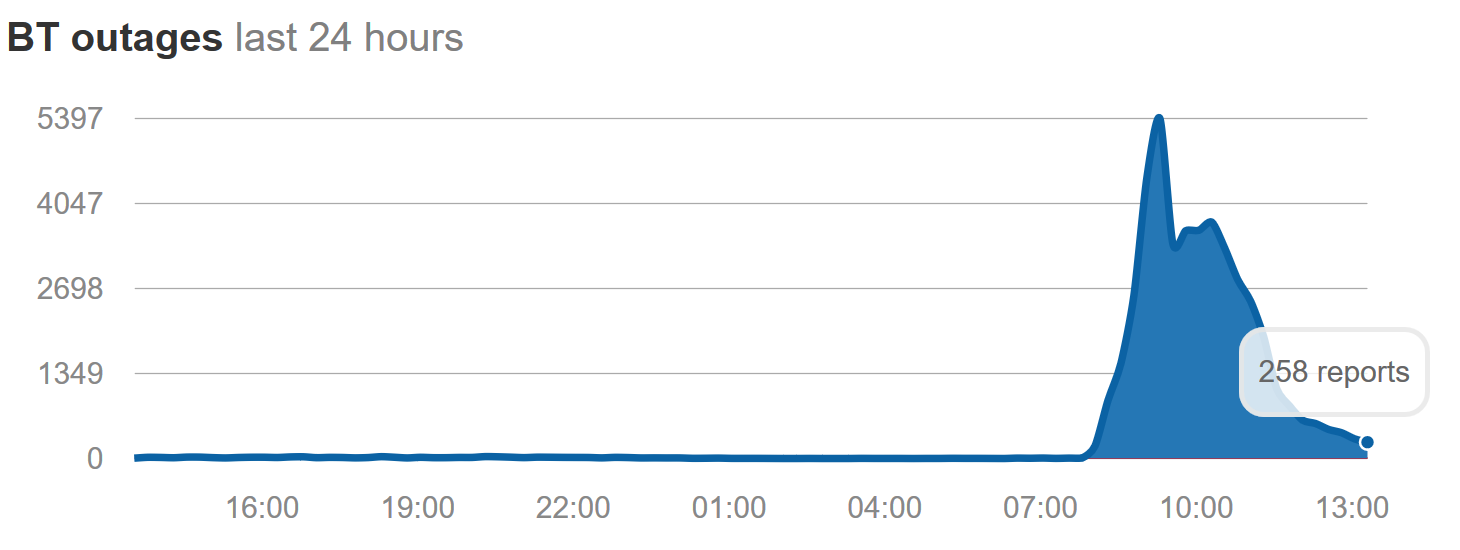

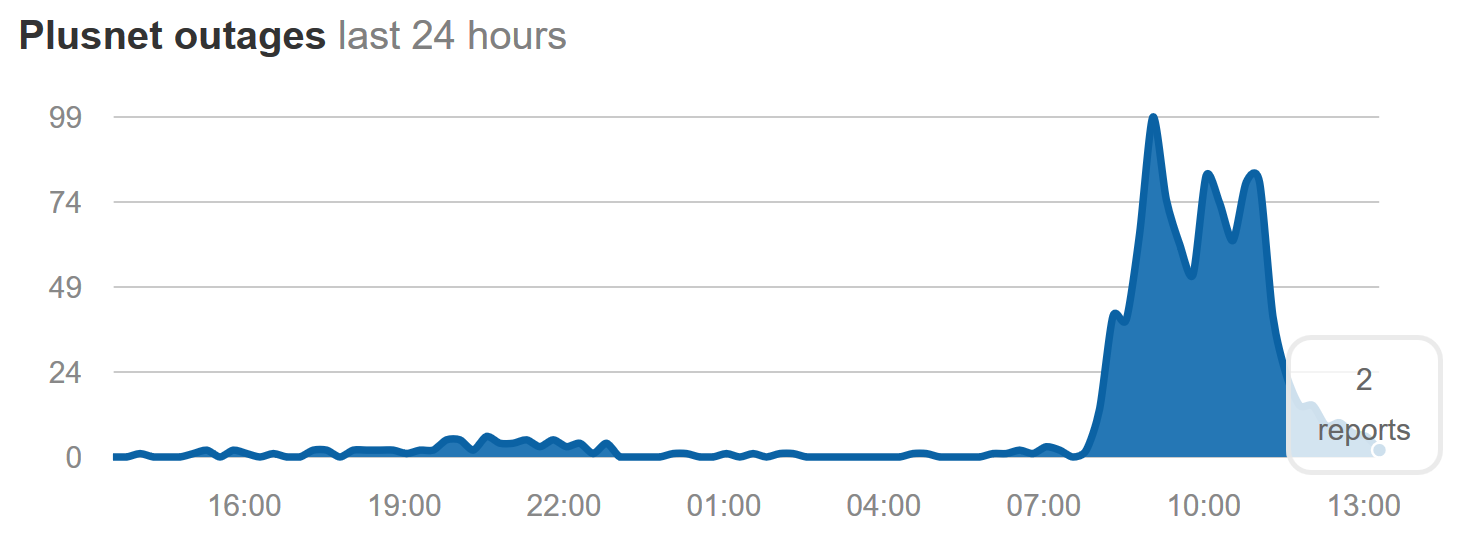

Earlier today (July 20th), thousands of BT Broadband and PlusNet Broadband customers were unable to access large chunks of the internet for up to four hours.

The cause of this outage has been attributed to “power issues at a partner’s site” by both BT and PlusNet:

https://twitter.com/BTCare/status/755708190420066304

https://twitter.com/plusnethelp/status/755716994725453824

No further details are forthcoming from BT/PlusNet at this time, although rumors have emerged that it was a “DNS outage”. DNS servers convert domain names (which are understandable names of websites) to IP addresses (which are necessary for your device to download a webpage).

I do not believe this issue to be DNS-specific: bypassing the BT/PlusNet DNS servers and using Google’s Public DNS servers instead during the outage didn’t resolve the connectivity issues (which it should have done, had this been an isolated problem with BT/PlusNet’s DNS servers).

Also, BT Broadband and PlusNet use different DNS servers:

| Provider | DNS servers |
| --- | --- |
| BT Broadband DNS | 62.6.40.178, 62.6.40.162, 194.72.9.38, 194.72.9.34, 194.72.0.98, 194.72.0.114, 194.74.65.68, 194.74.65.69 |
| PlusNet DNS | 212.159.13.49, 212.159.13.50, 212.159.6.9, 212.159.6.10 |

…so unless BT and PlusNet’s DNS servers are physically located in the same building and supplied by the same power source, a DNS problem is unlikely to be the root cause.

During the outage, DNS lookups were correctly resolving (using Google’s Public DNS), but it was not possible to “ping” or “tracert” to a large proportion of websites.
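For anyone wanting to repeat this check, here is a minimal Python sketch of the same reasoning: resolve the name first, then try to reach the resolved address, and report which step failed. The function name `diagnose` and its return labels are my own illustration, not a standard tool. It also assumes the system resolver is already pointed at a working DNS server (such as Google’s Public DNS), and it uses a TCP connection to port 80 as a stand-in for “ping”, which is not quite the same test.

```python
import socket


def diagnose(host, port=80, timeout=3.0, resolve=None, connect=None):
    """Classify a connectivity problem as DNS-related or not.

    resolve and connect are injectable (hypothetical hooks for this sketch)
    so the logic can be exercised without a live network. By default they
    use the system resolver and a plain TCP connection.
    """
    if resolve is None:
        resolve = socket.gethostbyname
    if connect is None:
        def connect(ip, port, timeout):
            # Open and immediately close a TCP connection to the resolved address.
            socket.create_connection((ip, port), timeout=timeout).close()

    try:
        ip = resolve(host)
    except OSError:
        # socket.gaierror is a subclass of OSError: the name did not resolve.
        return "dns-failure"
    try:
        connect(ip, port, timeout)
    except OSError:
        # The name resolved, but the address could not be reached.
        return "unreachable"
    return "ok"
```

Calling `diagnose("example.com")` during an outage like this one would be expected to return `"unreachable"` rather than `"dns-failure"` for the affected sites, which is the pattern described above.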

It was therefore not specifically a “DNS outage”, but rather a loss of power in other critical UK routing infrastructure.

If that’s the case, then it’s very concerning that there doesn’t appear to have been a functional UPS (Uninterruptible Power Supply) or backup power generator to keep power flowing to this piece (or these pieces) of critical network/routing infrastructure!

This may also be why BT/PlusNet are being quite vague as to the exact location of their “partner’s site” where these “power issues” arose today, as this information could be of use to terrorists wishing to attack/cripple the UK’s internet infrastructure.

This issue highlights just how fragile UK internet infrastructure is, and how a single point of failure can cause such a widespread outage.

Earlier this week, BT’s Openreach (the arm of BT responsible for UK broadband infrastructure) was heavily criticized by MPs for poor performance and under-investment in broadband infrastructure. This lack of investment could well have been a contributing factor in today’s major UK outage.

The UK Government’s proposed “Broadband Universal Service Obligation”, which states that every UK resident has the right to broadband speeds >10Mbps, can’t come soon enough, as this would force companies like BT Openreach to invest in broadband technology and infrastructure in the UK!

UPDATE 1:

According to the BBC, BT’s problems were caused by a power cut of roughly 20 minutes, lasting from 07:55 to 08:17 BST, at a data centre in London’s Docklands. The fault was at Telecity, one of the data centres for The London Internet Exchange (LINX), which is one of the world’s biggest internet nodes.

A spokesman told the BBC: “We take any outage very seriously. We will be having very serious conversations with Telecity about how this happened.”

But he dismissed the idea that the incident had shown up the vulnerability of the internet’s architecture in the UK.

“This was not the internet stopping – there are other routes for traffic to flow, including our own. Over 80% of our traffic continued to flow and it immediately started to recover even before the power was restored.”

This still doesn’t explain how a power outage was allowed to happen at Telecity in the first place. Uninterruptible power supplies, backup generators, and redundant routing are all designed to prevent just such an occurrence, yet in this case they don’t appear to have been present or working!

UPDATE 2:

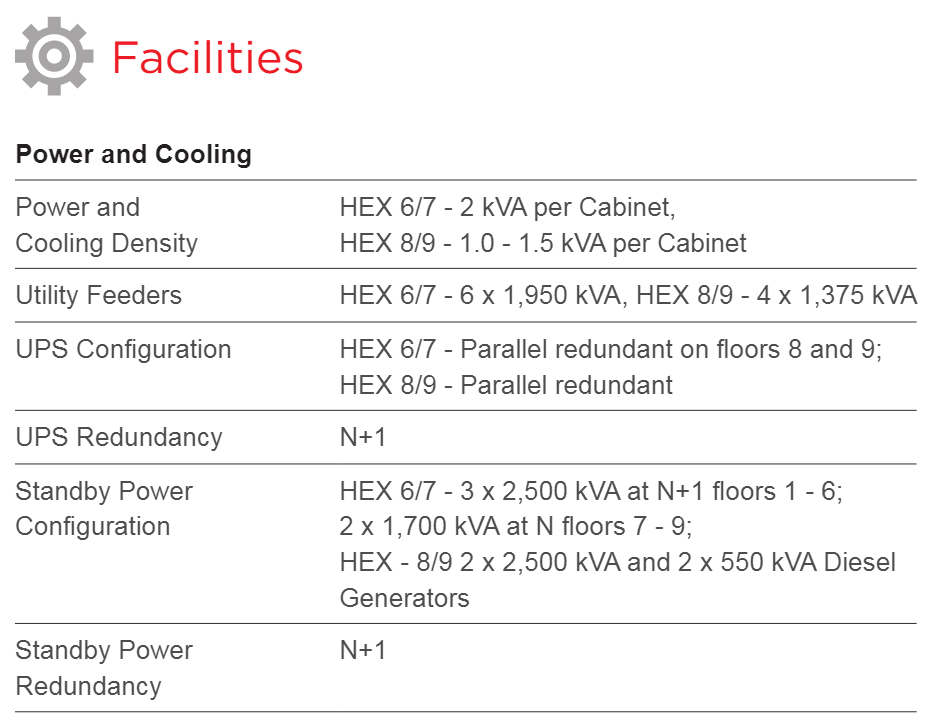

The Register are reporting that Telecity’s owner, Equinix, have confirmed a power issue “with one of [their] UPS systems at 8/9 Harbour Exchange (LD8)” at their site in London Docklands. Whilst the company have assured their customers that they will carry out a full investigation into the causes of this issue and “provide a further update once the investigation is complete”, it’s still a little perplexing that an isolated incident with a single UPS system could have such devastating national effects, especially given the backup/standby power systems supposedly in place at LD8.

UPDATE 3 – 21st July

In a near-identical repeat of yesterday, many BT Broadband and PlusNet customers are again suffering connectivity issues this morning:

https://twitter.com/BTCare/status/756032905361690624

https://twitter.com/plusnethelp/status/756036423422279684

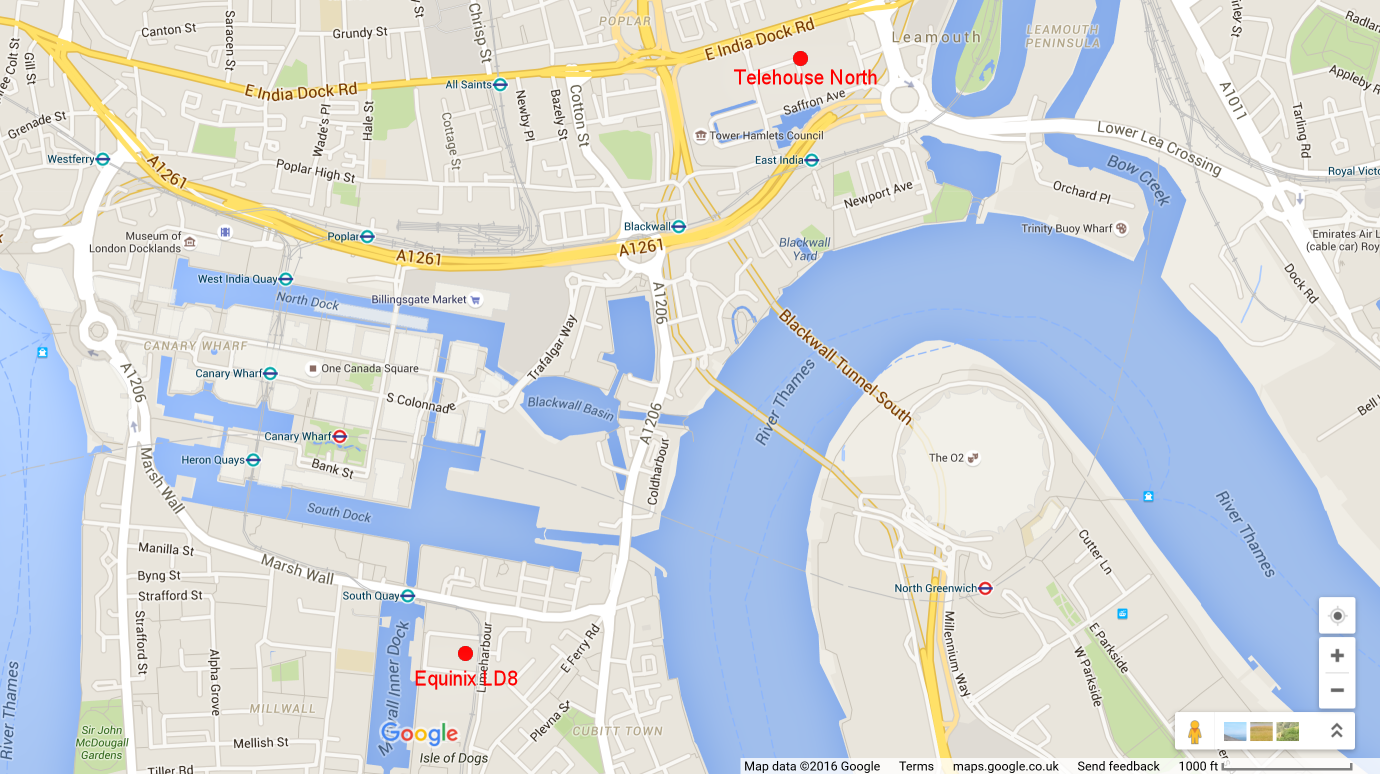

BT are reporting that today’s power outage is at Telehouse North in London, a separate Data Center less than 2 miles from the location of yesterday’s outage at Equinix LD8.

In what BT are describing as a “major outage”, today’s power failure has been traced to the 3rd floor at TFM10 Telehouse North and occurred at around 7:45am.

Two apparently separate power outages just 2 miles and 24 hours apart affecting the same infrastructure? – Coincidence …or something more sinister?

Some possibilities:

1) Over the past few days London has basked in a mini heat-wave with temperatures reaching 35°C. Whilst Data Centers will of course have air cooling/conditioning systems in place to keep equipment running cool, the hotter the outside ambient temperature, the more power these systems need in order to maintain the same temperature within the Data Center. This increase in demand for cooling power could have meant there wasn’t enough remaining power for IT hardware/infrastructure to function correctly. This is hard to believe though, as Data Centers should have made provisions for this and have redundant/backup power systems that automatically kick in in the event of power loss.

2) Given the close proximity of the two Data Centers, it’s possible that a fault (such as an over/under voltage) in the external electricity supply for the local area caused damage to internal power/UPS infrastructure in the two data centers. Again, this is somewhat unlikely as protection/filtering should have been in place to protect equipment against such faults.

3) Telecommunication providers and/or the UK Government are conducting “resilience” testing of UK Broadband infrastructure to better understand and plan for a terrorist attack or other major event which could seriously impact communications. However, the affected ISPs such as BT Broadband and PlusNet seem to have been taken by surprise by these outages, and it’s unlikely that such tests would be conducted without their knowledge. Of course, if these were “resilience” tests which did not go very well, it’s easier for the relevant parties to blame a simple “power outage” than to admit to failing a resilience test!

Regardless of the respective root causes of the two power outages – whether related or not – these two incidents highlight significant weaknesses in the UK’s broadband routing and infrastructure!

Serious questions need to be put to both Equinix and Telehouse as to why a power failure can bring their respective infrastructures to their knees, especially when both data centers boast multiple power sources & backups:

Here are the power specs for Equinix’s LD8 Data Center:

…and here are the power specs for Telehouse’s North Data Center:

UPDATE 4

A statement from Telehouse North, a connection partner of BT and Plusnet broadband services, reveals the specific cause of today’s outage:

“We are aware that there has been an issue with the tripping of a circuit breaker within Telehouse North that has affected a specific and limited group of customers within the building. The problem has been investigated and the solution identified. Our engineers are working with our customers on the resolution right now. We will release updates in due course.”

Equinix also earlier released a statement relating to their separate outage yesterday:

“Equinix experienced a brief power outage at the former Telecity LD8 site in London on Wednesday, July 20 at 7:55 am BST. This impacted a limited number of customers and service was promptly restored. Equinix engineers have diagnosed the root cause of the issue as a faulty UPS (uninterrupted power supply) system and are working with our customers to minimise the impact.”

Russell Poole, Managing Director of Equinix UK, is also keen to distance Equinix from today’s unrelated issue at Telehouse North, commenting: “As of Thursday morning there have been no further incidents at Equinix sites, however we are continuing to monitor the situation closely.”

So it would appear that a single faulty power supply and a single tripped circuit breaker have affected internet access for over a million people across the UK in the past two days! (BT Broadband alone have over 10 million customers, and claim yesterday’s issues affected around 10% of them.)

The debate now firmly focuses on one key question: Just how resilient is critical UK telecommunications infrastructure?

UPDATE 5 – 28th July

The fallout from last week’s outages continues! It’s now emerging that over one hundred IKEA customers who shopped last Thursday (21st July) have had their credit/debit cards charged TWICE!

IKEA spokeswoman Donna Moore told The Metro:

“We can confirm that following a BT network outage on Thursday, which caused a fault on our payment service platform, 102 duplicate payment transactions took place across all IKEA stores in the UK.

We are actively working with the payment service provider to rectify these transactions as quickly as possible so that those affected will have the duplicate payments released back to them by their banks.”

A BT spokesman also confirmed that this appeared to be linked to the outage at Telehouse North last Thursday.